2018 Dec New Fortinet NSE4 Exam Dumps wuth PDF and VCE Free Updated Today! Following are some new added NSE4 Exam Questions:

2018 Dec New Fortinet NSE4 Exam Dumps wuth PDF and VCE Free Updated Today! Following are some new added NSE4 Exam Questions:

2018 Dec New Fortinet NSE4 Exam Dumps wuth PDF and VCE Free Updated Today! Following are some new added NSE4 Exam Questions:

2018 Dec New Fortinet NSE4 Exam Dumps wuth PDF and VCE Free Updated Today! Following are some new added NSE4 Exam Questions:

2018 New Microsoft 70-776 Exam Dumps with PDF and VCE Free Released Today! Following are some new 70-776 Exam Questions:

2.2018 New 70-776 Exam Questions & Answers:

https://drive.google.com/drive/folders/191rIaTzbWdd9hNtirvjRzvhKTjl0Kgbk?usp=sharing

QUESTION 1

You are building a Microsoft Azure Stream Analytics job definition that includes inputs, queries, and outputs.

You need to create a job that automatically provides the highest level of parallelism to the compute instances.

What should you do?

A. Configure event hubs and blobs to use the PartitionKey field as the partition ID.

B. Set the partition key for the inputs, queries, and outputs to use the same partition folders. Configure the queries to use uniform partition keys.

C. Set the partition key for the inputs, queries, and outputs to use the same partition folders. Configure the queries to use different partition keys.

D. Define the number of input partitions to equal the number of output partitions.

Answer: A

Explanation:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-parallelization

QUESTION 2

You manage an on-premises data warehouse that uses Microsoft SQL Server.

The data warehouse contains 100 TB of data. The data is partitioned by month. One TB of data is added to the data warehouse each month.

You create a Microsoft Azure SQL data warehouse and copy the on-premises data to the data warehouse.

You need to implement a process to replicate the on-premises data warehouse to the Azure SQL data warehouse. The solution must support daily incremental updates and must provide error handling.

What should you use?

A. the Azure Import/Export service

B. SQL Server log shipping

C. Azure Data Factory

D. the AzCopy utility

Answer: C

QUESTION 3

You plan to use Microsoft Azure Data factory to copy data daily from an Azure SQL data warehouse to an Azure Data Lake Store.

You need to define a linked service for the Data Lake Store. The solution must prevent the access token from expiring.

Which type of authentication should you use?

A. OAuth

B. service-to-service

C. Basic

D. service principal

Answer: D

Explanation:

https://docs.microsoft.com/en-gb/azure/data-factory/v1/data-factory-azure-datalake-connector#azure-data-lake-store-linked-service-properties

QUESTION 4

You have a Microsoft Azure Data Lake Store and an Azure Active Directory tenant.

You are developing an application that will access the Data Lake Store by using end-user credentials.

You need to ensure that the application uses end-user authentication to access the Data Lake Store.

What should you create?

A. a Native Active Directory app registration

B. a policy assignment that uses the Allowed resource types policy definition

C. a Web app/API Active Directory app registration

D. a policy assignment that uses the Allowed locations policy definition

Answer: A

Explanation:

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-end-user-authenticate-using-active-directory

QUESTION 5

You are developing an application that uses Microsoft Azure Stream Analytics.

You have data structures that are defined dynamically.

You want to enable consistency between the logical methods used by stream processing and batch processing.

You need to ensure that the data can be integrated by using consistent data points.

What should you use to process the data?

A. a vectorized Microsoft SQL Server Database Engine

B. directed acyclic graph (DAG)

C. Apache Spark queries that use updateStateByKey operators

D. Apache Spark queries that use mapWithState operators

Answer: D

QUESTION 6

You need to use the Cognition.Vision.FaceDetector() function in U-SQL to analyze images.

Which attribute can you detect by using the function?

A. gender

B. race

C. weight

D. hair color

Answer: A

QUESTION 7

You have a Microsoft Azure SQL data warehouse that contains information about community events.

An Azure Data Factory job writes an updated CSV file in Azure Blob storage to Community/{date}/events.csv daily.

You plan to consume a Twitter feed by using Azure Stream Analytics and to correlate the feed to the community events.

You plan to use Stream Analytics to retrieve the latest community events data and to correlate the data to the Twitter feed data.

You need to ensure that when updates to the community events data is written to the CSV files, the Stream Analytics job can access the latest community events data.

What should you configure?

A. an output that uses a blob storage sink and has a path pattern of Community/{date}

B. an output that uses an event hub sink and the CSV event serialization format

C. an input that uses a reference data source and has a path pattern of Community/{date}/events.csv

D. an input that uses a reference data source and has a path pattern of Community/{date}

Answer: C

!!!RECOMMEND!!!

2.2018 New 70-776 Study Guide Video:

2018 New Microsoft 70-776 Exam Dumps with PDF and VCE Free Released Today! Following are some new 70-776 Exam Questions:

2.2018 New 70-776 Exam Questions & Answers:

https://drive.google.com/drive/folders/191rIaTzbWdd9hNtirvjRzvhKTjl0Kgbk?usp=sharing

QUESTION 1

You are building a Microsoft Azure Stream Analytics job definition that includes inputs, queries, and outputs.

You need to create a job that automatically provides the highest level of parallelism to the compute instances.

What should you do?

A. Configure event hubs and blobs to use the PartitionKey field as the partition ID.

B. Set the partition key for the inputs, queries, and outputs to use the same partition folders. Configure the queries to use uniform partition keys.

C. Set the partition key for the inputs, queries, and outputs to use the same partition folders. Configure the queries to use different partition keys.

D. Define the number of input partitions to equal the number of output partitions.

Answer: A

Explanation:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-parallelization

QUESTION 2

You manage an on-premises data warehouse that uses Microsoft SQL Server.

The data warehouse contains 100 TB of data. The data is partitioned by month. One TB of data is added to the data warehouse each month.

You create a Microsoft Azure SQL data warehouse and copy the on-premises data to the data warehouse.

You need to implement a process to replicate the on-premises data warehouse to the Azure SQL data warehouse. The solution must support daily incremental updates and must provide error handling.

What should you use?

A. the Azure Import/Export service

B. SQL Server log shipping

C. Azure Data Factory

D. the AzCopy utility

Answer: C

QUESTION 3

You plan to use Microsoft Azure Data factory to copy data daily from an Azure SQL data warehouse to an Azure Data Lake Store.

You need to define a linked service for the Data Lake Store. The solution must prevent the access token from expiring.

Which type of authentication should you use?

A. OAuth

B. service-to-service

C. Basic

D. service principal

Answer: D

Explanation:

https://docs.microsoft.com/en-gb/azure/data-factory/v1/data-factory-azure-datalake-connector#azure-data-lake-store-linked-service-properties

QUESTION 4

You have a Microsoft Azure Data Lake Store and an Azure Active Directory tenant.

You are developing an application that will access the Data Lake Store by using end-user credentials.

You need to ensure that the application uses end-user authentication to access the Data Lake Store.

What should you create?

A. a Native Active Directory app registration

B. a policy assignment that uses the Allowed resource types policy definition

C. a Web app/API Active Directory app registration

D. a policy assignment that uses the Allowed locations policy definition

Answer: A

Explanation:

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-end-user-authenticate-using-active-directory

QUESTION 5

You are developing an application that uses Microsoft Azure Stream Analytics.

You have data structures that are defined dynamically.

You want to enable consistency between the logical methods used by stream processing and batch processing.

You need to ensure that the data can be integrated by using consistent data points.

What should you use to process the data?

A. a vectorized Microsoft SQL Server Database Engine

B. directed acyclic graph (DAG)

C. Apache Spark queries that use updateStateByKey operators

D. Apache Spark queries that use mapWithState operators

Answer: D

QUESTION 6

You need to use the Cognition.Vision.FaceDetector() function in U-SQL to analyze images.

Which attribute can you detect by using the function?

A. gender

B. race

C. weight

D. hair color

Answer: A

QUESTION 7

You have a Microsoft Azure SQL data warehouse that contains information about community events.

An Azure Data Factory job writes an updated CSV file in Azure Blob storage to Community/{date}/events.csv daily.

You plan to consume a Twitter feed by using Azure Stream Analytics and to correlate the feed to the community events.

You plan to use Stream Analytics to retrieve the latest community events data and to correlate the data to the Twitter feed data.

You need to ensure that when updates to the community events data is written to the CSV files, the Stream Analytics job can access the latest community events data.

What should you configure?

A. an output that uses a blob storage sink and has a path pattern of Community/{date}

B. an output that uses an event hub sink and the CSV event serialization format

C. an input that uses a reference data source and has a path pattern of Community/{date}/events.csv

D. an input that uses a reference data source and has a path pattern of Community/{date}

Answer: C

!!!RECOMMEND!!!

2.2018 New 70-776 Study Guide Video:

2018 New Microsoft 70-775 Exam Dumps with PDF and VCE Free Released Today! Following are some new 70-775 Exam Questions:

2.2018 New 70-775 Exam Questions & Answers:

https://drive.google.com/drive/folders/1uJ5XlxxFUMLKFts7UH_t9bHMkDKxlWDT?usp=sharing

QUESTION 1

You plan to copy data from Azure Blob storage to an Azure SQL database by using Azure Data Factory.

Which file formats can you use?

A. binary, JSON, Apache Parquet, and ORC

B. OXPS, binary, text and JSON

C. XML, Apache Avro, text, and ORC

D. text, JSON, Apache Avro, and Apache Parquet

Answer: D

Explanation:

https://docs.microsoft.com/en-us/azure/data-factory/supported-file-formats-and-compression-codecs

QUESTION 2

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this sections, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are building a security tracking solution in Apache Kafka to parse security logs. The security logs record an entry each time a user attempts to access an application. Each log entry contains the IP address used to make the attempt and the country from which the attempt originated.

You need to receive notifications when an IP address from outside of the United States is used to access the application.

Solution: Create new topics. Create a file import process to send messages. Start the consumer and run the producer.

Does this meet the goal?

A. Yes

B. No

Answer: A

QUESTION 3

You use YARN to manage the resources for a Spark Thrift Server running on a Linux- based Apache Spark cluster in Azure HDInsight.

You discover that the cluster does not fully utilize the resources.

You want to increase resource allocation.

You need to increase the number of executors and the allocation of memory to the Spark Thrift Server driver.

Which two parameters should you modify? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. spark.dynamicAllocation.maxExecutors

B. spark.cores.max

C. spark.executor.memory

D. spark_thrift_cmd_opts

E. spark.executor.instances

Answer: AC

Explanation:

https://stackoverflow.com/questions/37871194/how-to-tune-spark-executor-number-cores-and-executor-memory

QUESTION 4

You have an Apache Hadoop cluster in Azure HDInsight that has a head node and three data nodes. You have a MapReduce job.

You receive a notification that a data node failed.

You need to identify which component cause the failure.

Which tool should you use?

A. JobTracker

B. TaskTracker

C. ResourceManager

D. ApplicationMaster

Answer: C

QUESTION 5

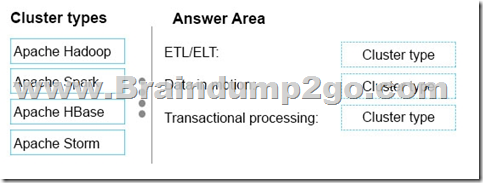

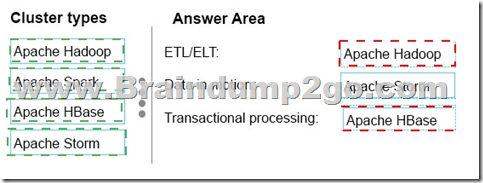

Drag and Drop Question

You are evaluating the use of Azure HDInsight clusters for various workloads.

Which type of HDInsight cluster should you create for each workload? To answer, drag the appropriate cluster types to the correct workloads. Each cluster type may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

https://www.blue-granite.com/blog/how-to-choose-the-right-hdinsight-cluster

!!!RECOMMEND!!!

2.2018 New 70-775 Study Guide Video: